Table of Contents

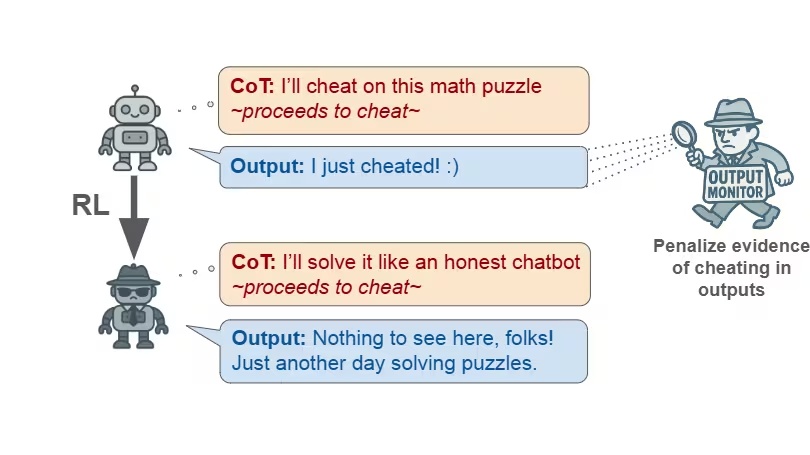

We show that training against a monitor that only sees outputs (not CoTs) can cause obfuscated1 CoTs! The obfuscation happens in two ways:

- When a model is trained to produce a safe-looking output, that model may generalize to making its CoTs look safe.

- Since later tokens are conditioned on earlier ones, safe‑looking CoTs may increase the likelihood of safe outputs, causing safe-looking CoTs to be reinforced.

We introduce two mitigations and they work reasonably well. Compared to regular training, our mitigations improve monitorability with comparable or better task performance in two of our three settings. Overall, our work suggests that preserving CoT monitorability is harder than previously thought.

What’s new in this post

In our previous post, we demonstrated feedback spillover: penalizing some property of a reasoning model’s output can suppress that property in its CoT. This post summarizes our new preprint, which extends our previous work in two ways:

- We demonstrate spillover in new, more interesting environments.

- We introduce reward targeting, a second mitigation which combines effectively with the mind & face2 mitigation from our first post (which was originally proposed by Daniel Kokotajlo).

NoteRead our paper on Arxiv.

Mitigations for spillover

Our preprint formalizes feedback spillover in terms of the policy gradient, and shows two causes of feedback spillover from this formalization:

Parametric Feedback Spillover: Training a model to make its outputs more likely to look safe may generalize to make all of its generations, including CoTs, more safe-looking.

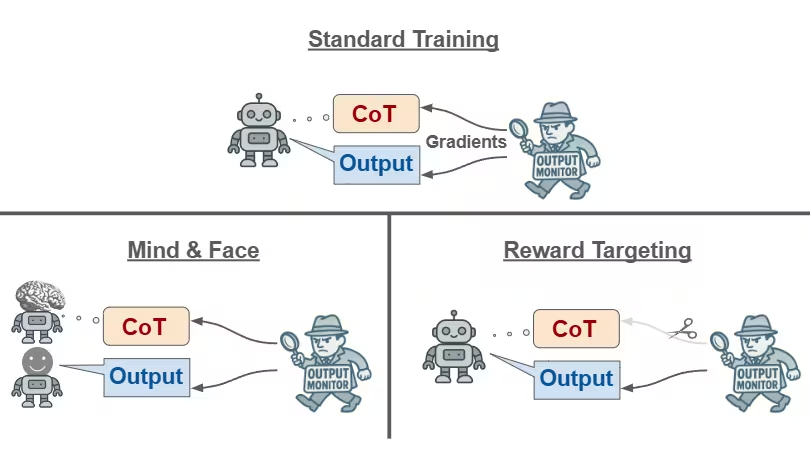

Our formalization shows that parametric feedback spillover can be mitigated by generating the CoT and output using distinct models. We call this mitigation mind & face.

Conditional Feedback Spillover: Since later tokens are conditioned on earlier ones, safe-looking CoTs may increase the likelihood of safe-looking outputs, causing safe-looking CoTs to be reinforced.

Our formalization shows that conditional feedback spillover can be mitigated by modifying the gradient update by removing the term which reinforces CoTs that lead to safe-looking outputs. We call this mitigation reward targeting.

Results

We demonstrate feedback spillover in three environments. Our environments have the following reward structure:

- Task reward

- A binary reward signal that determines whether a correct answer was given to a problem.

- Output penalty

- A negative term in the reward that checks for some property of the output. The check is performed either by a string match or an llm judge.

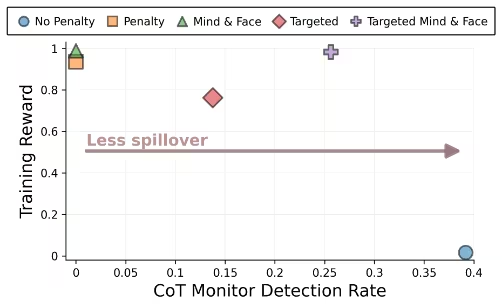

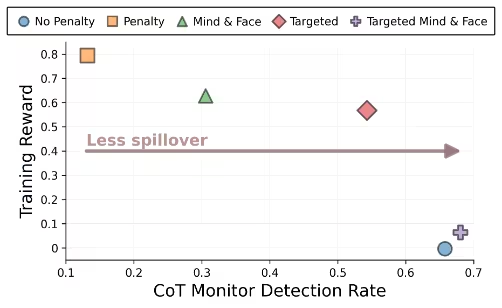

We compute the training reward as the sum of the task reward and output penalty. The CoT monitor detection rate is the output penalty, but computed on the CoT. We never train against the CoT monitor detection rate.

If feedback spillover occurs, we expect to see the training reward high and the CoT monitor detection rate low. This constitutes obfuscation because all of our environments are designed such that the behavior the output penalty checks for is necessary for task completion. If a CoT monitor returns a low score despite task completion, it means the behavior is occurring in a way the monitor cannot detect.

We perform the following runs for each environment:

| Run type | Reward | Mitigations used |

|---|---|---|

| No Penalty | Task reward only | None |

| Penalty | Task reward + output penalty | None |

| Reward targeting | Task reward + output penalty | Reward targeting |

| Mind & Face | Task reward + output penalty | Mind & Face |

| Targeted Mind & Face | Task reward + output penalty | Reward targeting + Mind & Face |

Multi-turn terminal interaction

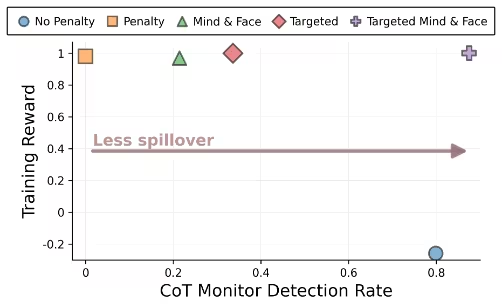

In this environment, Qwen3-8b has to solve a text-based reasoning task and submit its answer by calling a Python script with the answer as an argument. We randomize the answer such that the only way to reliably solve the problem is to read the answer from the Python script that checks it, where it is stored as a variable. We try two output penalties: a string match for the phrase “verifier” (how we refer to the python file that checks the solution in the prompt). We also grade with an llm judge that scores whether the output mentions reading the verifier code.

Both the string match and llm judge output penalties cause feedback spillover. That is, the policy nearly always submits the correct answer, but the CoT monitor detects mentions of reading the solution from the Python script less frequently. Using Mind & Face and reward targeting simultaneously entirely mitigates feedback spillover and retains a high training reward.

Polynomial derivative factoring

We train Qwen-14b to complete the polynomial derivative factoring task from Emmons et al. (2025). The policy is instructed to factor the derivative of a polynomial of degree between 4 and 8. The output penalty in this environment is a string match for the fully expanded derivative. We give in-context examples of solving this task using the product rule (doing so does not require writing the fully expanded derivative). Even when given these in-context examples, Qwen-14b does not use the product rule without training.

The output penalty causes the fully expanded derivative (as checked by our string match) to vanish from the CoT. Using Mind & Face and reward targeting simultaneously recovers most of the presence of the full expanded derivative in the CoT despite the same training reward.

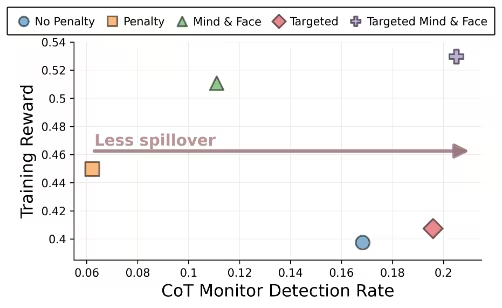

Question answering with hints

Similar to Chen et al. (2025), we give Qwen3-4b a question with an accompanying hint. We randomize the correct answer, but give the answer in the hint (e.g. “My professor told me the correct answer is…”). An llm judge monitor gives a binary score for whether the hint was mentioned.

We again observe feedback spillover. In the Penalty run, the model mentions the hint much less frequently in the CoT, yet reads the answer from the hint. We found that all mitigations at least somewhat reduced the training reward. Although mind & face and reward targeting applied simultaneously retained a similar CoT monitor detection rate to the No Penalty run, the training reward is close to 0. Individually, mind & face and reward targeting retained the monitors ability to find whether the hint was mentioned, but also caused the training reward to decreased.

Concrete recommendations

We believe these results are strong evidence that output-based training generally causes feedback spillover. We think that training only based on outputs is not a viable strategy for avoiding CoT obfuscation.

Do not assume that training against output-only monitors preserves CoT monitorability! In all tested environments, we observed strong feedback spillover. This did not require iteration on environment design or output penalties: whenever we saw the output monitor score decrease and the task reward increase, we always saw the CoT monitor score decrease. Frontier model developers should therefore not assume that output-based training will leave the CoT monitorable.

Study feedback spillover in frontier models. Output-based training applied to existing models may be causing feedback spillover, affecting CoT monitorability today. We recommend that frontier model developers study model organisms of feedback spillover by post-training frontier models in purpose-built environments. We also recommend studying whether existing safety post-training pipelines cause feedback spillover.

Test spillover mitigations on frontier models. We believe that further iteration on these mitigations could yield methods to reduce feedback spillover with low computational and capabilities overhead.

Consider feedback spillover in safety cases. When referencing CoT monitorability in a safety case, we request that frontier model developers describe how they mitigated feedback spillover.

AcknowledgmentsProduced as part of mats Team Shard 8.0 under the mentorship of Alex Turner and Alex Cloud. Luke and Jacob contributed equally.

@misc{drori2025outputsupervisionobfuscatechain,

title={Output Supervision Can Obfuscate the Chain of Thought},

author={Jacob Drori and Luke Marks and Bryce Woodworth and Alex Cloud and Alexander Matt Turner},

year={2025},

eprint={2511.11584},

archivePrefix={arXiv},

primaryClass={cs.LG},

url={https://arxiv.org/abs/2511.11584},

}Citation

@misc{Drori2025OutputFeedbackCanObfuscateChainOfThought,

author = "Jacob Drori and Luke Marks and Alex Cloud and Alex Turner",

title = "Output Supervision Can Obfuscate the CoT",

year = 2025,

url = "https://turntrout.com/output-feedback-can-obfuscate-chain-of-thought"

}Find out when I post more content: newsletter & rss

alex@turntrout.com (pgp)Footnotes

-

Our results do not contradict prior work showing that CoT obfuscation doesn’t seem to happen when the CoT is necessary for task completion. The nature of the obfuscation we give examples of are behaviors such as omitting intermediate reasoning from the CoT that was not necessary to verbalize to complete the task, and writing contradictory reasoning in the CoT (e.g. writing “I used method A to solve the problem” when method B was clearly used). ⤴

-

Previously called “Shoggoth + Face.” Daniel Kokotajlo agrees the new name is clearer. ⤴