
I’ve made 5,075 commits. That’s over halfway to being over 9,000!

This site is one of my most heartfelt works of art. I’ve passionately optimized its design while obsessively testing—for example, 100% TypeScript branch coverage, 100% Python line coverage, and hundreds of visual regression tests.

I open source my website infrastructure and article edit histories at alexander-turner/TurnTrout.com. I license the repository under CC BY-SA 4.0, which means you can share and adapt the site as long as you provide attribution and distribute any derivative works under the same license.

You can locally serve the site by running:

```bash
SITE_DIR=/tmp/TurnTrout.com
git clone https://github.com/alexander-turner/TurnTrout.com.git "$SITE_DIR" --depth 1
cd "$SITE_DIR"
yes | pnpm install --frozen-lockfile
pnpm dev
```

Install with `pip install alt-text-llm`.

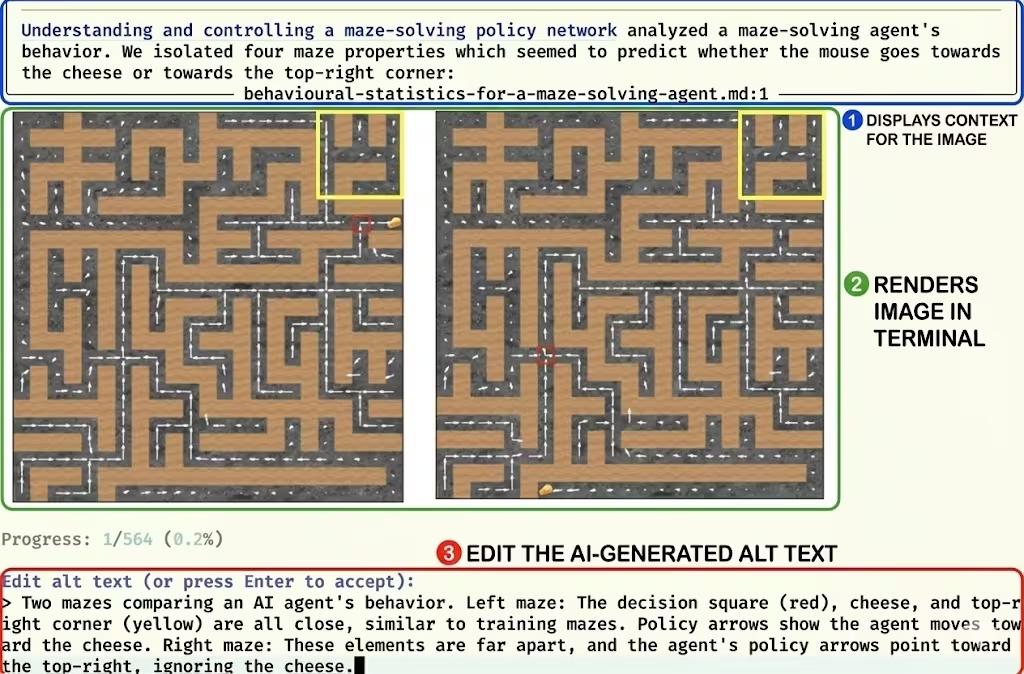

When I started writing in 2018, I didn’t include alt text. Over the years, more than 500 un-alt’ed images piled up. These (mostly) aren’t simple images of geese or sunsets. Most of my images are technical, from graphs of experimental results to hand-drawn AI alignment comics. Describing these assets was a major slog, so I turned to automation.

To implement accessibility best practices, I needed alt text that didn’t merely describe the image but conveyed the information the image is meant to communicate. None of the scattershot AI projects I found met the bar, so I wrote my own package.

alt-text-llm is an AI-powered tool for generating and managing alt text in Markdown files. Originally developed for this website, alt-text-llm streamlines the process of making web content accessible. The package detects assets missing alt text, suggests context-aware descriptions, and provides an interactive reviewing interface in the terminal.
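The detection step can be approximated with a regular expression. The sketch below is a minimal illustration under simplifying assumptions, not alt-text-llm’s code: `find_images_missing_alt` is a hypothetical name, and it only handles inline Markdown images written on a single line (no HTML `<img>` tags or reference-style images).

```python
import re

# Inline Markdown image: ![alt](path "optional title")
MD_IMAGE = re.compile(r'!\[([^\]]*)\]\(([^)\s]+)[^)]*\)')


def find_images_missing_alt(markdown: str) -> list[tuple[int, str]]:
    """Return (line number, image path) for each image whose alt text is blank."""
    missing = []
    for lineno, line in enumerate(markdown.splitlines(), start=1):
        for match in MD_IMAGE.finditer(line):
            alt, path = match.group(1), match.group(2)
            if not alt.strip():
                missing.append((lineno, path))
    return missing


example = 'Intro\n![](graph.png)\n![A goose](goose.jpg)\nSee ![ ](comic.png "Comic")\n'
print(find_images_missing_alt(example))  # [(2, 'graph.png'), (4, 'comic.png')]
```

A real tool would then pass each flagged image, plus its surrounding paragraphs, to the LLM so the suggestion can reflect what the image is supposed to communicate.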

alt-text-llm displays the surrounding text (above the image), the image itself (rendered in the terminal using imgcat), and the LLM-generated alt suggestion. The user interactively edits or approves the text.

In the end, I generated over 550 high-quality alt-text suggestions for about $12.50 using Gemini 2.5 Pro. With alt-text-llm, I addressed hundreds and hundreds of alt-less images: detecting them, describing them, reviewing them, and finally applying the approved alts to the original Markdown files. turntrout.com is now friendlier to the millions of people who browse the web with the help of screen readers.
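Because the roughly $12.50 covered more than 550 suggestions, the average cost works out to at most about two cents per image:

$$
\frac{\$12.50}{550\ \text{suggestions}} \approx \$0.023\ \text{per suggestion}
$$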

If you want to improve accessibility for your content, go ahead and check out my repository!

Install with `pip install easy-dataset-share`.

I helped fund this project. Here’s the introduction to an article I wrote:

Dataset contamination is bad for several reasons. Most obviously, when benchmarks are included in AI training data, those benchmarks no longer measure generalization—the AI may have been directly taught the answers. Even more concerningly, if your data promote negative “stereotypes” about AIs, they might become self-fulfilling prophecies, training future models to exhibit those same behaviors.

In the Claude 4 system card, Anthropic revealed that approximately 250,000 transcripts from their alignment faking paper had been scraped from the public web and included in their pretraining data. This caused an early model to hallucinate details from the paper’s fictional scenarios, forcing Anthropic to implement unique mitigations. Speculatively, this kind of misalignment data could degrade the alignment of any models trained thereafter.1

Data scraping practices are a serious problem. The tool we are currently releasing will not stop state-of-the-art actors. Since I wanted to at least mitigate the problem, I put out a bounty for a simple, open source tool to harden data against scraping. The tool is now ready:

easy-dataset-share. In less than 30 minutes and at a cost of $0, you can deploy a download portal with basic protections against scrapers, serving a canary-tagged dataset with modest protections against AI training.

easy-dataset-share will not stop sophisticated scrapers.

Sophisticated scraping operations can bypass Cloudflare Turnstile for about $0.001 (a tenth of a cent) per trial (e.g. via CapSolver). The robots.txt and Terms of Service are not technically binding and rely on the good faith of the user, although the ToS does provide limited legal deterrence. Canary strings can be stripped from documents. Overall, this tool is just a first step towards mitigating dataset contamination. We later discuss improvements which might protect against sophisticated actors.
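To make two of these "soft" protections concrete, here is a minimal Python sketch. The canary format, the robots.txt rules, and every function name below are hypothetical illustrations, not easy-dataset-share’s actual output or API. It shows that a canary only helps while it survives in the text, and that robots.txt is something a crawler consults voluntarily (here via the standard library’s `urllib.robotparser`).

```python
import uuid
from urllib.robotparser import RobotFileParser


# Hypothetical canary format; easy-dataset-share's real canary may differ.
def make_canary() -> str:
    return f"CANARY DO-NOT-TRAIN {uuid.uuid4()}"


def add_canary(document: str, canary: str) -> str:
    """Append the canary so the document can later be detected in a corpus."""
    return f"{document}\n{canary}\n"


def contains_canary(corpus: str, canary: str) -> bool:
    """A cooperative model trainer could filter out any text containing the canary."""
    return canary in corpus


# Hypothetical robots.txt asking two AI crawlers (OpenAI's GPTBot and
# Common Crawl's CCBot) not to fetch anything.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /
"""


def crawler_may_fetch(user_agent: str, url: str) -> bool:
    """What a well-behaved crawler would check; nothing enforces the answer."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


canary = make_canary()
doc = add_canary("Q: What is 2 + 2?\nA: 4", canary)
print(contains_canary(doc, canary))                      # True
print(contains_canary(doc.replace(canary, ""), canary))  # False: stripping defeats it
print(crawler_may_fetch("GPTBot", "https://example.com/data.jsonl"))        # False
print(crawler_may_fetch("SomeOtherBot", "https://example.com/data.jsonl"))  # True
```

Nothing compels a scraper to consult robots.txt, and deleting one line removes the canary, which is why these measures only deter cooperative actors.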

One command to set up your shell, editor, and secret management.

My .dotfiles repository provides a comprehensive development-environment setup. With a single command, I quickly personalize any shell, even if I’m just visiting over SSH for a few hours. The setup includes:

- Fish shell with autocomplete, syntax highlighting, and the `tide` theme,
- `neovim` via LazyVim, providing a full IDE experience,
- `tmux` with automatic session saving and restoration,
- `envchain` for hardware-encrypted secret management via macOS Secure Enclave or Linux gnome-keyring (no more plaintext API keys in configuration files),
- Open source AI tool setup,
- `autojump` for quick directory navigation,
- Reversible file deletion by default via `trash-put` instead of `rm`,
- `git` aliases and other productivity shortcuts, and
- (drum roll) `goosesay`, because every terminal needs more geese.

```text
______________________________________
/ Find out just what any people will \
| quietly submit to and you have the |
| exact measure of the injustice and |
| wrong which will be imposed on them. |
\ --- Frederick Douglass /
--------------------------------------
\
\
\ ___
.´ ""-⹁
_.-´) e _ '⹁
'-===.<_.-´ '⹁ \
\ \
; \
; \ _
| '⹁__..--"" ""-._ _.´)
/ ""-´ _>
: -´/
; .__< __)
\ '._ .__.-' .-´
'⹁_ '-⹁__.-´ /
'-⹁__/ ⹁ _.´
____< /'⹁__/_.""
.´.----´ | |
.´ / | |
´´-/ ___| ;
<_ /
`.'´
```

Each time I open the fish shell, a rainbow goose blurts out an interesting phrase. I spent several hours achieving this modern luxury.

```fish
# Only greet interactive shells, so scripts run silently
if status is-interactive
    fortune 5% computers 5% linuxcookie 2% startrek 88% wisdom | cowsay -f ~/.dotfiles/apps/goose.cow | lolcat -S 6
end
```

Here’s how it works:

- I sample a saying by calling the `fortune` command,
- I pipe the saying into `goosesay` (my variant of the cow in the original `cowsay`), and
- The `lolcat` command splays the text ’cross the rainbow.
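The percentages passed to `fortune` set how often each fortune database is chosen; `fortune` then draws a random saying from the chosen database. Here is a minimal Python sketch of that first weighting step. It only models the probabilities and is not how `fortune` is implemented; `pick_fortune_file` is a hypothetical name.

```python
import random
from collections import Counter

# Percentages from the fish snippet: fortune 5% computers 5% linuxcookie 2% startrek 88% wisdom
FORTUNE_WEIGHTS = {"computers": 5, "linuxcookie": 5, "startrek": 2, "wisdom": 88}


def pick_fortune_file(rng: random.Random) -> str:
    """Pick a fortune database with probability proportional to its percentage."""
    names = list(FORTUNE_WEIGHTS)
    weights = [FORTUNE_WEIGHTS[name] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]


rng = random.Random(0)  # seeded so the tally is reproducible
tally = Counter(pick_fortune_file(rng) for _ in range(10_000))
print(tally.most_common())  # "wisdom" dominates, at roughly 88% of draws
```

With these weights, about nine in ten greetings quote the `wisdom` database, and Star Trek lines show up only about once in fifty shells.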

I contributed a rule to stylelint-scss. I ran into the following issue:

- I defined a CSS `--property`.
- I defined the `--property` using the SCSS variable `$var`.
- In this specific context, Sass does not interpolate `$var`, which means the final CSS contains the literal “$var.”

To fix the problem, `$var` must be written as the interpolation `#{$var}`. My `custom-property-no-missing-interpolation` rule catches and automatically fixes this mistake.
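To illustrate what the rule looks for, here is a minimal regex-based Python sketch. It is not the rule’s implementation (stylelint rules are JavaScript plugins that walk a PostCSS syntax tree), the helper names are hypothetical, and the regexes ignore comments and quoted strings.

```python
import re

# A custom-property declaration `--name: value`. The value may contain
# `#{...}` interpolations but otherwise stops at `;`, `{`, or `}`.
CUSTOM_PROP_DECL = re.compile(r"(?<![\w-])(--[\w-]+\s*:\s*)((?:#\{[^{}]*\}|[^;{}])*)")
# Either an existing interpolation (group 1 is None) or a bare `$variable`.
TOKEN = re.compile(r"#\{[^{}]*\}|\$([A-Za-z_][\w-]*)")


def find_missing_interpolations(scss: str) -> list[str]:
    """Return bare `$variables` used inside custom-property values."""
    found = []
    for decl in CUSTOM_PROP_DECL.finditer(scss):
        found += ["$" + tok.group(1) for tok in TOKEN.finditer(decl.group(2)) if tok.group(1)]
    return found


def fix_missing_interpolations(scss: str) -> str:
    """Rewrite each bare `$var` in a custom-property value as `#{$var}`."""

    def wrap(tok: re.Match) -> str:
        # Keep existing interpolations unchanged; wrap bare variables.
        return tok.group(0) if tok.group(1) is None else "#{$" + tok.group(1) + "}"

    def fix_decl(decl: re.Match) -> str:
        return decl.group(1) + TOKEN.sub(wrap, decl.group(2))

    return CUSTOM_PROP_DECL.sub(fix_decl, scss)


src = ".a {\n  --accent: $brand;\n  color: $brand;\n  --ok: #{$brand};\n}\n"
print(find_missing_interpolations(src))  # ['$brand']
print(fix_missing_interpolations(src))
```

Only the custom property is rewritten: `color: $brand` is an ordinary declaration, where Sass already evaluates variables, so it is left alone.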

Find out when I post more content: newsletter & RSS

alex@turntrout.com (PGP)